base02_Transformer

Encoder

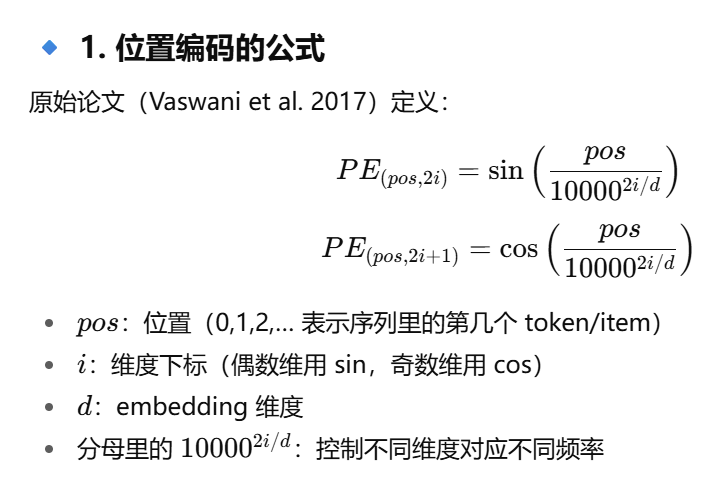

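Positional Encoding

Illustration of the positional function:

Each word embedding is added to the value of the positional function at that token's position, giving an input embedding that carries position information.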

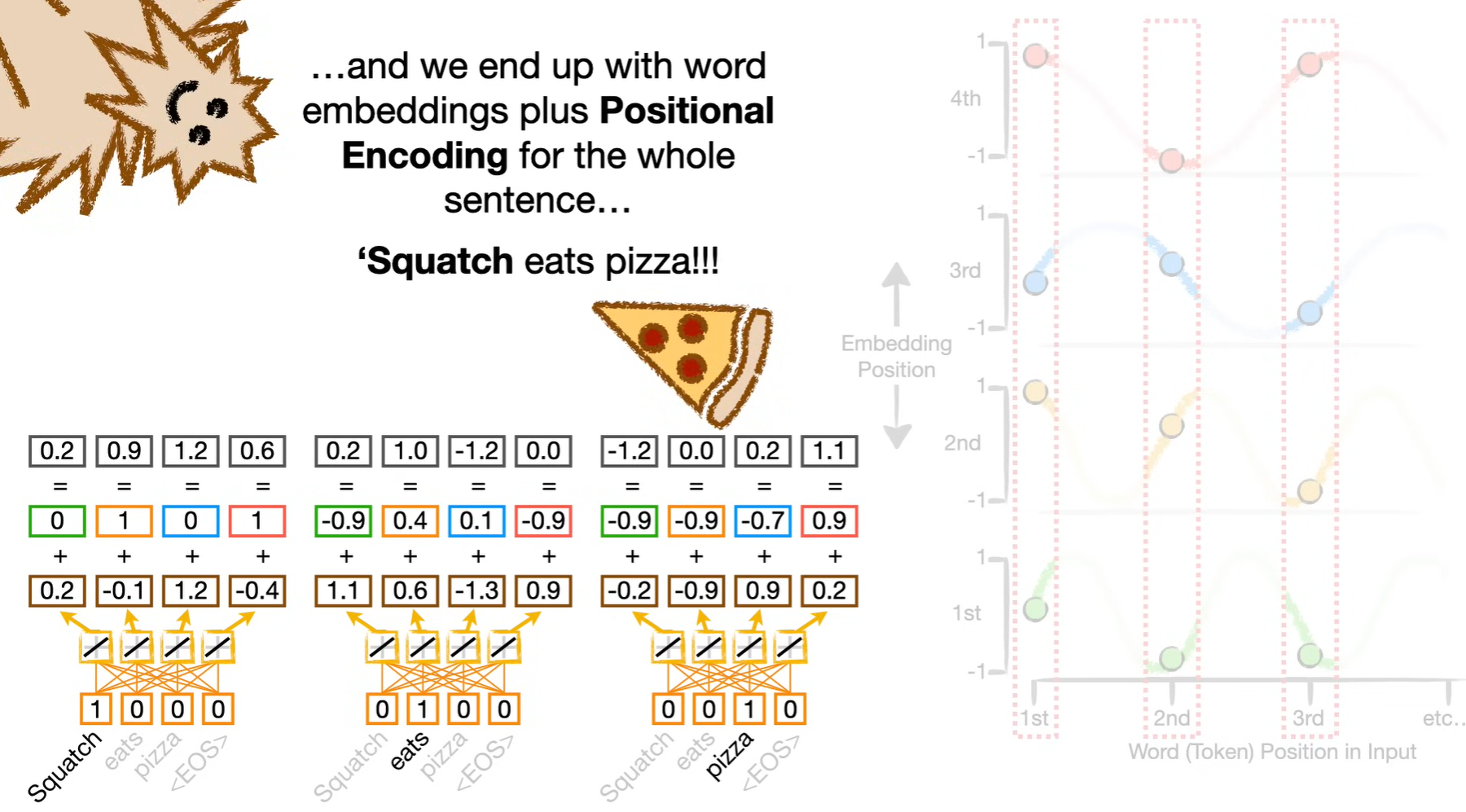

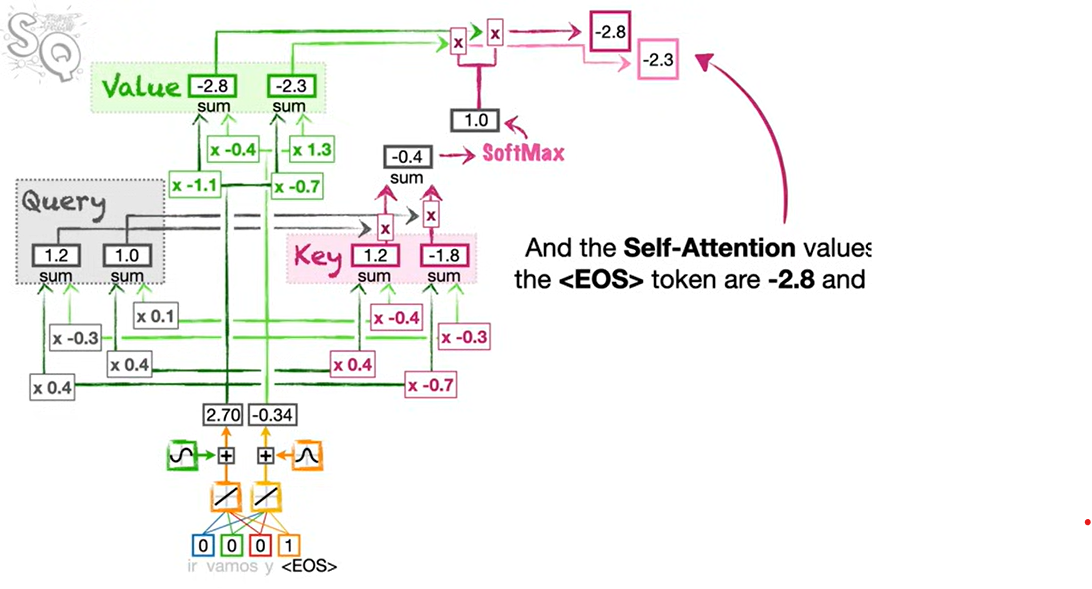
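The addition described above can be sketched in a few lines. The notes do not say which positional function is used, so this sketch assumes the sinusoidal function from the original Transformer paper; `sinusoidal_positions`, the sequence length of 5, and the model width of 8 are illustrative names and values, not taken from the post.

```python
import numpy as np

def sinusoidal_positions(seq_len: int, d_model: int) -> np.ndarray:
    """Return a (seq_len, d_model) table of position values (d_model assumed even)."""
    positions = np.arange(seq_len)[:, None]          # shape (seq_len, 1)
    even_dims = np.arange(0, d_model, 2)[None, :]    # shape (1, d_model / 2)
    angles = positions / np.power(10000.0, even_dims / d_model)
    table = np.zeros((seq_len, d_model))
    table[:, 0::2] = np.sin(angles)  # even dimensions use sine
    table[:, 1::2] = np.cos(angles)  # odd dimensions use cosine
    return table

# Hypothetical word embeddings: 5 tokens, model width 8.
rng = np.random.default_rng(0)
word_embeddings = rng.normal(size=(5, 8))

# Element-wise sum of word embedding and position value, as in the text above.
encoder_input = word_embeddings + sinusoidal_positions(5, 8)
```

The position table depends only on the position index and the dimension, so it can be computed once and reused for every sequence of the same length.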

Self-Attention

- When computing Q, K, and V, the same weight matrices are shared across all tokens

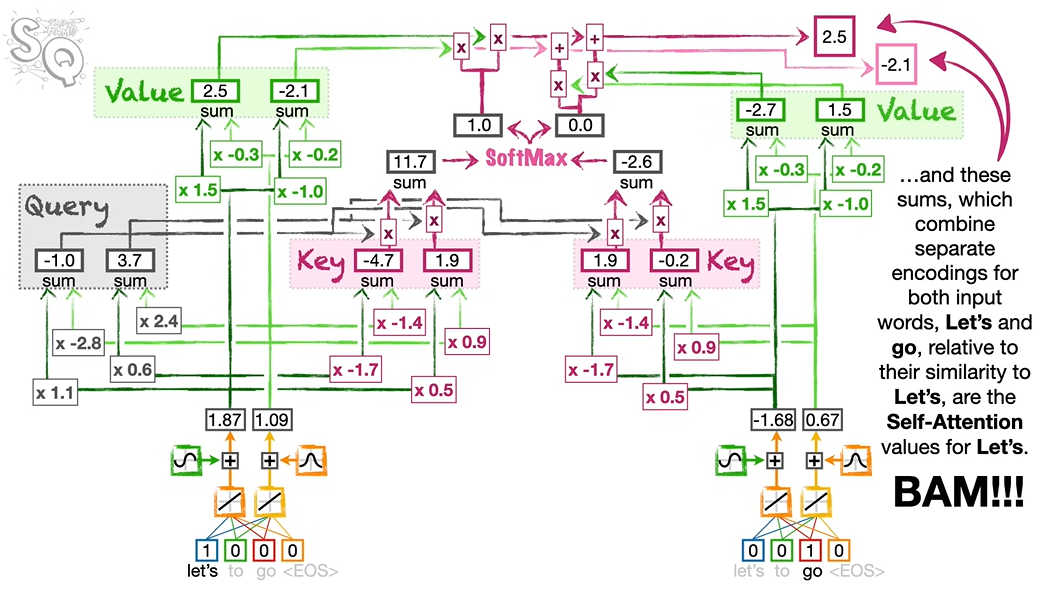

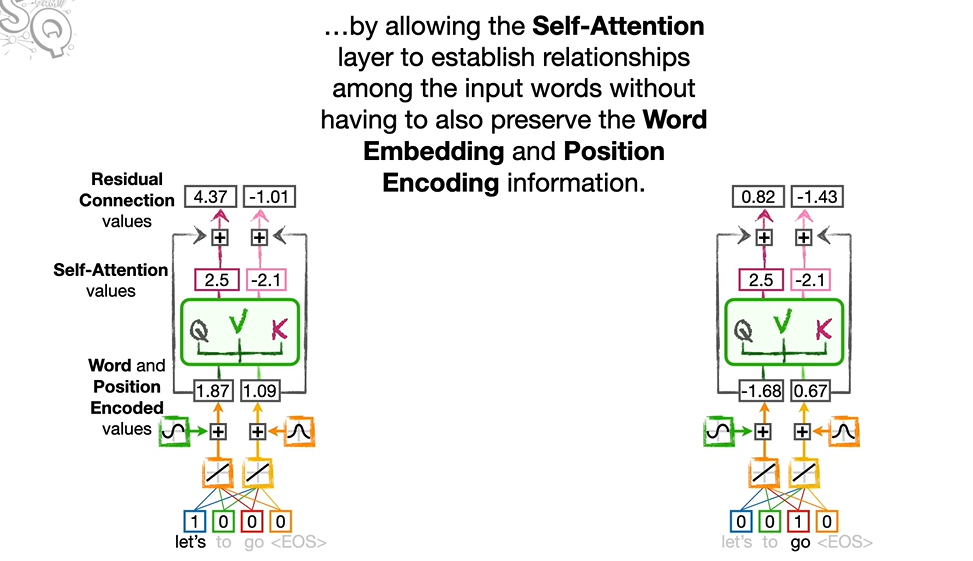
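As a minimal single-head sketch of the point about shared weights: one set of matrices `w_q`, `w_k`, `w_v` is applied to every token row of the input. The scaled dot-product form follows the original Transformer paper; the function names, shapes, and random values are assumptions made for illustration.

```python
import numpy as np

def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(x, w_q, w_k, w_v):
    """Single-head self-attention over x of shape (seq_len, d_model)."""
    # The same three matrices multiply every token (every row of x).
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])  # (seq_len, seq_len)
    weights = softmax(scores, axis=-1)       # each row sums to 1
    return weights @ v, weights

rng = np.random.default_rng(0)
seq_len, d_model, d_k = 4, 8, 8              # illustrative sizes
x = rng.normal(size=(seq_len, d_model))
w_q, w_k, w_v = (rng.normal(size=(d_model, d_k)) for _ in range(3))

out, attn = self_attention(x, w_q, w_k, w_v)
```

Because the projection is a single matrix product over all rows, projecting one token on its own gives the same query vector as the corresponding row of the batched result.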

Residual Connection

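- Helps the deep stack train quickly: the skip path gives gradients a direct route around each sub-layer. (Parallel computation over tokens comes from self-attention rather than from the residual path.)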

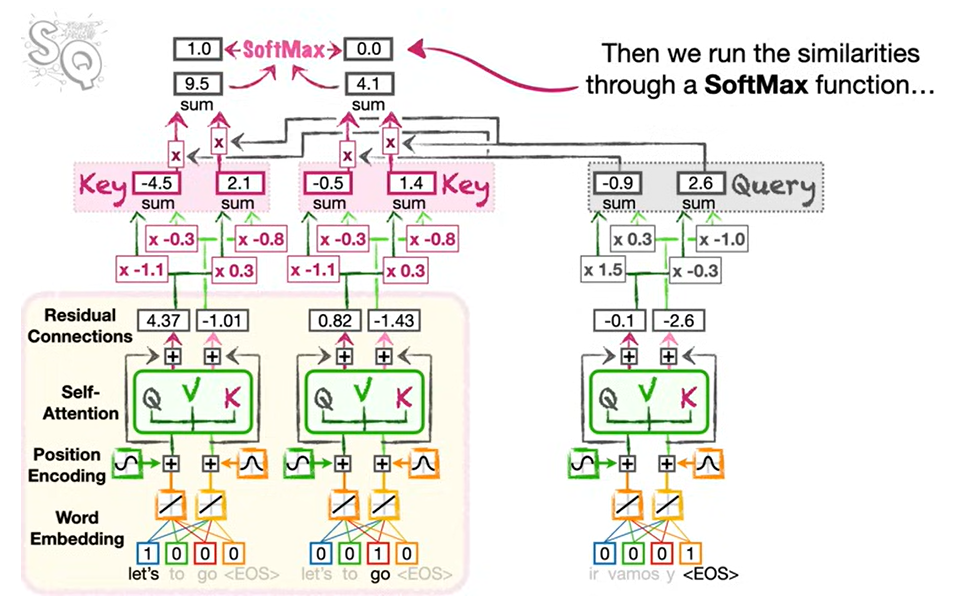
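A residual connection simply adds a sub-layer's input back to its output. The sketch below also applies layer normalization afterwards, which is an assumption taken from the "Add & Norm" step of the original Transformer rather than something stated in these notes; learnable scale and shift parameters are omitted, and `residual_block` is a hypothetical helper name.

```python
import numpy as np

def layer_norm(x: np.ndarray, eps: float = 1e-5) -> np.ndarray:
    """Normalize each row to zero mean and unit variance (no learned parameters)."""
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def residual_block(x: np.ndarray, sublayer) -> np.ndarray:
    """Add the sub-layer output to its input, then normalize."""
    return layer_norm(x + sublayer(x))

rng = np.random.default_rng(0)
x = rng.normal(size=(4, 8))          # 4 tokens, model width 8 (illustrative)
w = rng.normal(size=(8, 8)) * 0.1    # stand-in for any sub-layer's weights

y = residual_block(x, lambda h: h @ w)
```

If the sub-layer outputs zeros, the block reduces to normalizing the input alone, which is the sense in which the skip path lets information pass around the sub-layer.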

Decoder

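- The self-attention part works the same way as in the Encoder (in the original Transformer, with an added mask that hides later positions);

- When computing Q, K, and V, the Decoder uses its own weights, which differ from the Encoder's.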
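To illustrate the two points above, here is a sketch of decoder self-attention with its own projection matrices. The causal mask is an assumption taken from the original Transformer, not from these notes, and every name here (`masked_self_attention`, `dec_w_q`, and so on) is hypothetical.

```python
import numpy as np

def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def masked_self_attention(x, w_q, w_k, w_v):
    """Same computation as encoder self-attention, plus a causal mask."""
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])
    # True above the diagonal marks positions later than the current token.
    future = np.triu(np.ones_like(scores, dtype=bool), k=1)
    scores = np.where(future, -np.inf, scores)
    weights = softmax(scores, axis=-1)
    return weights @ v, weights

rng = np.random.default_rng(1)
seq_len, d_model = 4, 8                      # illustrative sizes
target = rng.normal(size=(seq_len, d_model))

# The decoder owns its projection matrices; they are not the encoder's.
dec_w_q, dec_w_k, dec_w_v = (rng.normal(size=(d_model, d_model)) for _ in range(3))

dec_out, dec_attn = masked_self_attention(target, dec_w_q, dec_w_k, dec_w_v)
```

With the mask in place, the first token can attend only to itself, and no token places weight on a later position.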

This post is licensed under CC BY 4.0 by the author.